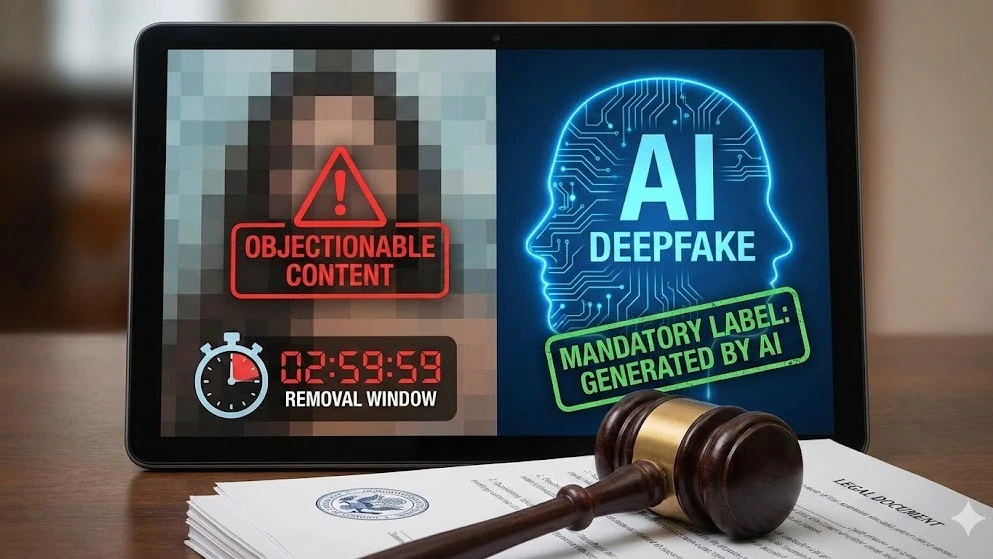

From Feb 20, Social Media Must Tag AI Content or Face Action
